Recently I’ve spent a significant amount of time playing with AWS Lambda functions triggered from an SQS queue. You can read the outcome in this series of posts:

AWS Lambda events with Serverless Framework

Error handling in AWS Lambda triggered by SQS events

One of the benefits of Lambda functions is that they scale automatically.

With the default settings they can scale up to the account limit, which is 1000 concurrent invocations per region.

This limit applies to all Lambda functions running in the account combined, not to a single function, so if you have multiple applications in the same account they all draw from the same pool. This is not always desirable.

I believe having more control over resources is important for a resilient system.

One such control is the Lambda function’s timeout, which lets you kill invocations that take longer than expected, e.g. because they hang in some unrecoverable state.

Another, which controls the number of concurrent invocations at the function level, is the ReservedConcurrentExecutions property in CloudFormation, or reservedConcurrency in serverless.yaml, e.g.:

    functions:
      hello:
        handler: handler.hello
        name: ${self:provider.stage}-lambdaName
        timeout: 10
        reservedConcurrency: 5

Such a Lambda will be killed if it runs longer than 10 sec, and at most 5 instances are allowed to run concurrently.
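The timeout is a hard stop enforced by AWS. If you would rather fail with a clear error shortly before it hits, one option is a small guard inside the function. The following is only a sketch: `withDeadline`, `safetyMarginMs` and `doWork` are hypothetical names introduced for illustration, while `getRemainingTimeInMillis()` is the method exposed on the Lambda context object in the Node.js runtime.

```javascript
// Sketch: reject if the work does not finish before a self-imposed deadline.
// withDeadline and safetyMarginMs are hypothetical names, not part of any AWS API.
function withDeadline(work, context, safetyMarginMs = 500) {
  // Time left before Lambda kills the invocation, minus a safety margin
  const budgetMs = Math.max(context.getRemainingTimeInMillis() - safetyMarginMs, 0);
  let timer;
  const deadline = new Promise((_, reject) => {
    timer = setTimeout(
      () => reject(new Error(`Gave up after ${budgetMs} ms`)),
      budgetMs
    );
  });
  // Whichever settles first wins; always clear the timer afterwards
  return Promise.race([work, deadline]).finally(() => clearTimeout(timer));
}

// doWork is a placeholder for the real processing
const doWork = () => new Promise((resolve) => setTimeout(() => resolve("done"), 50));

exports.handler = async (event, context) => withDeadline(doWork(), context);
```

The configured timeout still applies on top of this; the guard only gives the function a chance to log and fail on its own terms.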

Let’s run a test to prove it.

First, we’ll take the example of a Lambda function triggered from an SQS queue from my previous post and send it some messages.

This should trigger invocations of the Lambda. We will put some sleep time in the function to give AWS time to spin up more instances while there are still messages to process in the queue.

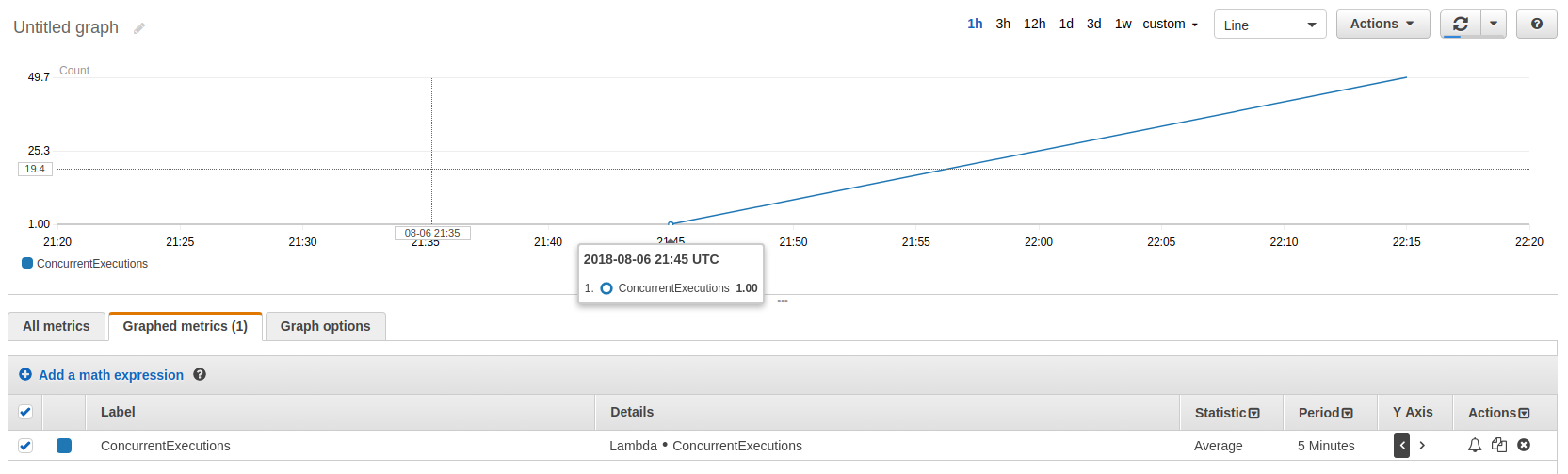

We should see that, without a concurrency limit, AWS keeps starting more instances of the function.

Here is the example code:

    // receiver.js
    exports.handler = (event, context, callback) => {
      console.log("Start");
      // simulate 10 sec of work so that messages pile up in the queue
      setTimeout(() => {
        console.log("Wake up!");
        console.log("End");
        callback(null, "");
      }, 10000); // delay 10 sec
    };

No limit is set on Lambda concurrency:

    receiver:
      handler: receiver.handler
      timeout: 30 # timeout in sec, default is 6
      events:
        - sqs:
            arn:
              Fn::GetAtt:
                - MyQueue
                - Arn
            batchSize: 1 # default and max is 10

The full source code is in the GitHub repository.

We have set batchSize to 1 so that a single Lambda instance cannot fetch multiple messages at a time. This gives us more concurrent instances with fewer messages in the queue.
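To make the effect of batchSize more concrete: each invocation receives its messages in `event.Records`, so with batchSize of 1 the array always has a single element, and with a larger value it can hold up to that many. A minimal sketch of a handler that walks through the batch is shown below; `handleBatch` is a hypothetical name, and the returned count is there only to make local checks easy.

```javascript
// Sketch: log every message delivered to one invocation.
// handleBatch is a hypothetical name; event.Records is the SQS event shape.
async function handleBatch(event) {
  console.log(`Received ${event.Records.length} message(s)`);
  for (const record of event.Records) {
    // record.body is the message body sent to the queue ("test" in this post)
    console.log(`${record.messageId}: ${record.body}`);
  }
  return event.Records.length;
}

exports.handler = handleBatch;
```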

In one terminal we will tail the logs from CloudWatch:

    sls logs -f receiver -t

In the other we will generate some messages to process. We can use a simple bash script:

    export QUEUE_URL=$(aws sqs get-queue-url --queue-name MyQueue --query 'QueueUrl' --output text --profile=sls)
    for run in {1..100}
    do
      aws sqs send-message --queue-url "${QUEUE_URL}" --message-body "test" --profile=sls
    done

This will send a total of 100 messages to the SQS queue, which will start triggering Lambda invocations. If you like, you can run this script in multiple terminals to generate more messages concurrently.

If we go to the receiver Lambda function in the AWS console and check its metrics, we will see how the number of concurrent executions grows and then scales back down to the initial number.

Let’s see what happens if we set the limit:

    receiver:
      handler: receiver.handler
      timeout: 30 # timeout in sec, default is 6
      events:
        - sqs:
            arn:
              Fn::GetAtt:
                - MyQueue
                - Arn
            batchSize: 1 # default and max is 10
      reservedConcurrency: 5

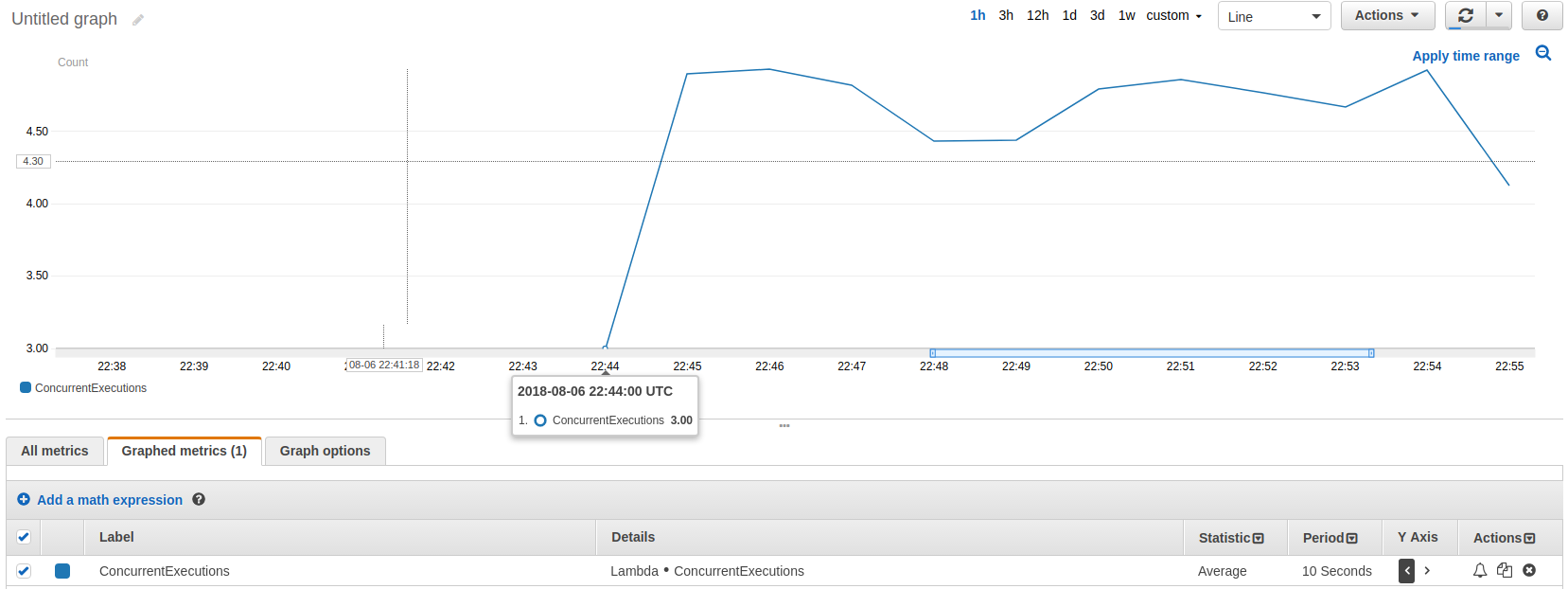

Now the number of concurrently running functions is constant which can be observed on the chart:
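The price of a fixed limit is that the queue drains more slowly. A back-of-the-envelope estimate for the test above can be sketched as follows; `estimateDrainSeconds` is a hypothetical helper, and the result is only a rough lower bound that ignores polling delays, cold starts and retries.

```javascript
// Rough lower bound on the time needed to drain the queue.
// estimateDrainSeconds is a hypothetical helper, not an AWS API.
function estimateDrainSeconds(messages, concurrency, secondsPerInvocation, batchSize = 1) {
  const invocations = Math.ceil(messages / batchSize); // one invocation per batch
  const waves = Math.ceil(invocations / concurrency);  // at most `concurrency` run at once
  return waves * secondsPerInvocation;
}

// 100 messages, 5 concurrent instances, 10 sec sleep, batchSize 1
console.log(estimateDrainSeconds(100, 5, 10)); // 200
```

So with reservedConcurrency of 5, the 100 test messages need at least 20 waves of 10 sec each.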

Takeaways:

- even though Lambda scales by itself, we can control this with an upper limit

- it is good to set a timeout on a Lambda function to kill invocations running longer than expected

- if Lambda is triggered from SQS, we can use batchSize to limit the number of messages processed in a single Lambda invocation